The problem is that we have only the articles, not their topics, and we would like an algorithm that can sort the documents into topics.

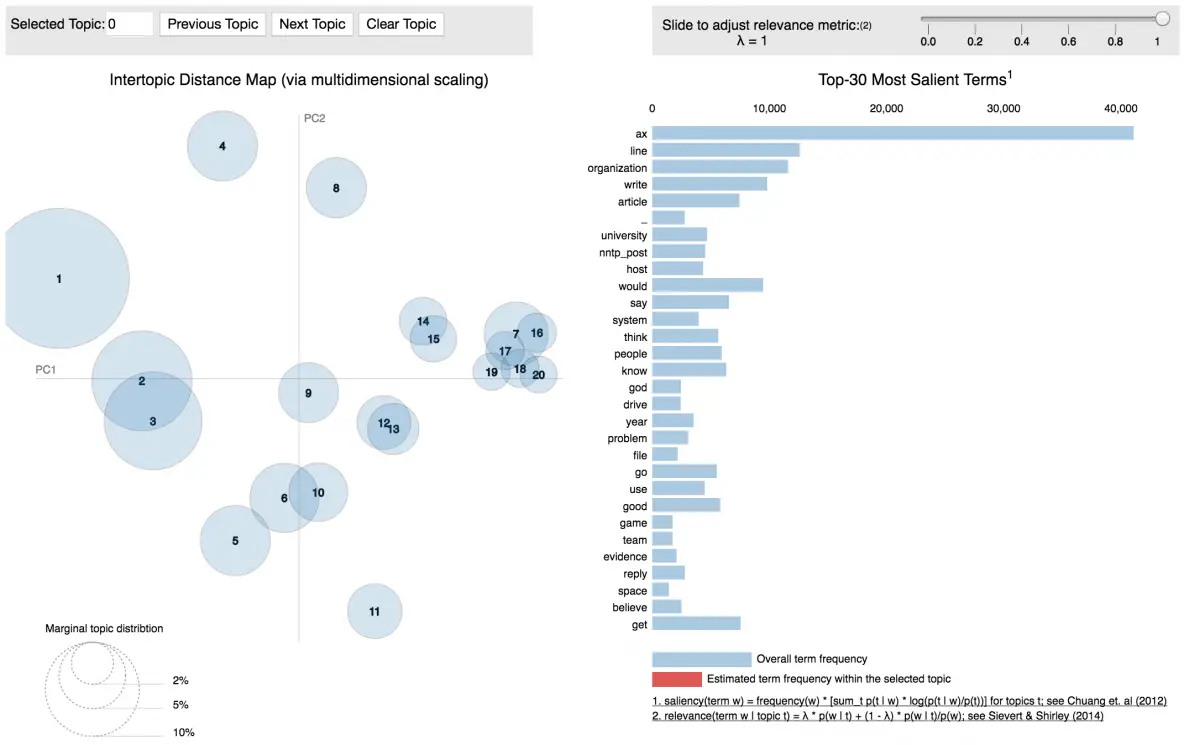

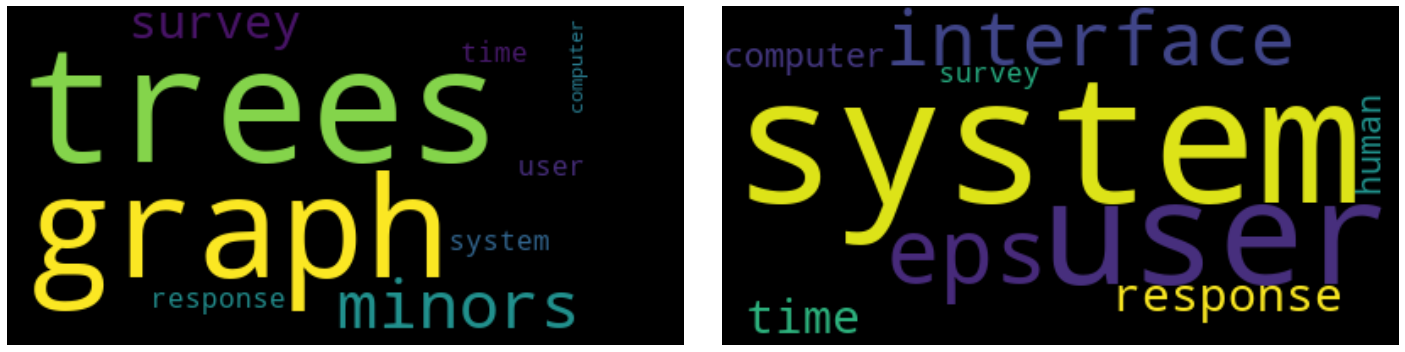

Imagine, then, a collection of documents or articles in which each document has a topic such as computer science, physics, or biology, and some articles have several topics at once. To address this, we’ll describe Latent Dirichlet Allocation (LDA) through the lens of topic modeling.

Topic modeling starts with a large corpus of text and reduces it to a much smaller number of topics. It analyzes how often words and phrases occur in the documents. From this it estimates the probability that a word or phrase belongs to a certain topic, and it clusters documents by their similarity. Topics are found from the relationships between words in the corpus. The model looks for words that frequently co-occur and tries to find clusters of words that appear together more often than chance alone would predict. This gives a rough idea of which topics a document contains and how they rank in importance. We can also use topic modeling to discover patterns of words in a collection of documents.

Topic modeling is useful for two reasons. It lets users explore what’s inside their corpus (documents), and it can reveal connections between topics they weren’t even aware of. Other applications include text summarization, recommender systems, and spam filters.

Current methods for extracting topic models include Latent Dirichlet Allocation (LDA), Latent Semantic Analysis (LSA), Probabilistic Latent Semantic Analysis (PLSA), and Non-Negative Matrix Factorization (NMF). In this article, we’ll focus on LDA.

LDA is an unsupervised clustering technique commonly used for text analysis. It is a probabilistic topic model: each topic is represented as a probability distribution over words, and each document is represented as a mixture of these topics.
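To make the description of LDA more concrete, here is a minimal from-scratch sketch that fits LDA with collapsed Gibbs sampling. This is one standard inference method. Gensim’s `LdaModel` uses online variational Bayes instead, so this is not how Gensim computes it. The function name `lda_gibbs`, the hyperparameter defaults (`alpha=0.1`, `beta=0.01`, `iterations=200`), and the toy corpus are our own illustrative assumptions. Each token’s topic is repeatedly resampled from `p(z = k | rest) ∝ (n_dk + α)(n_kw + β) / (n_k + Vβ)`, where:

- `n_dk` counts the tokens of topic `k` in document `d`;
- `n_kw` counts word `w` under topic `k`;
- `n_k` is the total token count for topic `k`;
- `V` is the vocabulary size.

```python
import random
from collections import defaultdict


# Default alpha, beta and iterations are illustrative assumptions, not tuned values.
def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA to tokenized documents with collapsed Gibbs sampling (illustrative sketch).

    docs: list of documents, each a list of word strings.
    Returns (doc_topic, topic_word): per-document topic proportions (theta)
    and per-topic word probabilities (phi, as dicts keyed by word).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    V = len(vocab)
    topics = range(num_topics)

    n_dk = [[0] * num_topics for _ in docs]   # tokens per (document, topic)
    n_kw = [defaultdict(int) for _ in topics]  # tokens per (topic, word)
    n_k = [0] * num_topics                     # tokens per topic

    # Start from a random topic assignment for every token.
    z = []
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(num_topics)
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Take the token out of the counts before resampling it.
                k = z[d][i]
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # Unnormalized full conditional p(z_i = k | all other assignments).
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][w] + beta) / (n_k[t] + V * beta)
                           for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                # Put the token back under its newly sampled topic.
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1

    # Posterior-mean estimates of theta and phi from the final counts.
    doc_topic = [[(n_dk[d][k] + alpha) / (len(doc) + num_topics * alpha) for k in topics]
                 for d, doc in enumerate(docs)]
    topic_word = [{w: (n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in vocab}
                  for k in topics]
    return doc_topic, topic_word


# Made-up toy corpus: two biology-like and two physics-like "articles".
docs = [
    "gene cell protein dna cell gene".split(),
    "protein dna gene cell".split(),
    "quark photon energy particle".split(),
    "particle energy photon quark energy".split(),
]
doc_topic, topic_word = lda_gibbs(docs, num_topics=2)
for d, theta in enumerate(doc_topic):
    dominant = max(range(len(theta)), key=theta.__getitem__)
    print(f"doc {d}: dominant topic {dominant}, mixture {[round(p, 2) for p in theta]}")
for k, phi in enumerate(topic_word):
    print(f"topic {k}: top words {sorted(phi, key=phi.get, reverse=True)[:3]}")
```

Each row of `doc_topic` is a document’s topic mixture. Taking its largest entry sorts the document into its dominant topic, while the full row still captures articles that mix several topics. On a corpus this tiny, the exact numbers depend on the seed. A real collection needs far more documents and tuned hyperparameters.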
Topic modeling is a natural language processing (NLP) technique for discovering the topics that occur in a collection of documents. It can be used for tasks ranging from clustering to dimensionality reduction. Topic models are also useful in many scenarios, including text classification and trend detection. A major challenge, however, is extracting high-quality, meaningful, and clear topics.

Gensim, a Python library that describes itself as “topic modelling for humans”, makes our task a little easier. Gensim’s introduction describes it as “designed to automatically extract semantic topics from documents, as efficiently (computer-wise) and painlessly (human-wise) as possible.” To me, this makes it a no-brainer for topic modeling.

We’ll continue using our Donald Trump Twitter dataset. It’s relatively small, easy to work with, and covers a very diverse set of topics. Given this dataset of tweets, we will try to identify the key topics or themes.

Gensim’s website states that it was “designed to process raw, unstructured digital texts”, and it comes with a preprocessing module for just that purpose. Its `preprocess_string` function makes preparing text very easy.
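Gensim’s own function is imported with `from gensim.parsing.preprocessing import preprocess_string`. By default it applies a chain of filters that lowercase the text and strip tags, punctuation, numbers, stopwords, and short tokens before stemming. The sketch below shows what such a chain does to raw text without requiring Gensim. It is a rough standard-library stand-in, not Gensim’s implementation. The function name `clean_text_tokens`, the small `STOPWORDS` set, and the `min_len=3` cutoff are our own assumptions, and stemming is left out.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption); Gensim ships a much larger one.
STOPWORDS = {"the", "and", "for", "are", "was", "with", "this", "that",
             "you", "our", "will", "have", "has", "not", "but", "its"}


def clean_text_tokens(text, min_len=3):
    """Hypothetical stdlib approximation of a Gensim-style filter chain.

    Lowercases, strips HTML tags, punctuation and digits, splits on whitespace,
    then drops stopwords and tokens shorter than min_len. Unlike Gensim's
    default filters, it does not stem tokens.
    """
    text = text.lower()
    text = re.sub(r"<[^>]*>", " ", text)    # strip HTML tags
    text = re.sub(r"[^\w\s]|_", " ", text)  # strip punctuation (and underscores)
    text = re.sub(r"\d+", " ", text)        # strip numbers
    tokens = text.split()                   # split() also collapses repeated whitespace
    return [t for t in tokens if t not in STOPWORDS and len(t) >= min_len]


# Made-up example text, not taken from the Twitter dataset.
sample = "The <b>new</b> energy report is out: 3 charts and 12 pages on solar power!"
tokens = clean_text_tokens(sample)
print(tokens)
# → ['new', 'energy', 'report', 'out', 'charts', 'pages', 'solar', 'power']
print(Counter(tokens))  # bag-of-words counts, the kind of input LDA works from
```

With Gensim itself, the usual next step after tokenizing is to build a `gensim.corpora.Dictionary` from the token lists. Its `doc2bow` method then turns each document into (token id, count) pairs for the LDA model.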
